Profile

Dr. Torsten Sattler

Publications

Scalable 6-DOF Localization on Mobile Devices

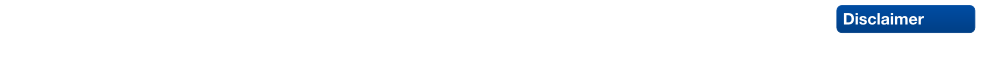

Recent improvements in image-based localization have produced powerful methods that scale up to the massive 3D models emerging from modern Structure-from-Motion techniques. However, these approaches are too resource-intensive to run in real time, let alone to be implemented on mobile devices. In this paper, we propose to combine the scalability of such a global localization system running on a server with the speed and precision of a local pose tracker on a mobile device. Our approach is both scalable and drift-free by design and eliminates the need for loop closure. We propose two strategies to combine the information provided by local tracking and global localization. We evaluate our system on a large-scale dataset of the historic inner city of Aachen, where it achieves interactive framerates at a localization error of less than 50 cm while using less than 5 MB of memory on the mobile device.

The final publication will be available at link.springer.com upon publication.

@inproceedings{middelberg2014eccv,

author = "Middelberg, Sven and Sattler, Torsten and Untzelmann, Ole and Kobbelt, Leif",

title = "{Scalable 6-DOF Localization on Mobile Devices}",

booktitle = "{Proceedings of the 13th European Conference on Computer Vision (ECCV'14)}",

year = 2014

}

SIFT-Realistic Rendering

3D localization approaches establish correspondences between points in a query image and a 3D point cloud reconstruction of the environment. Traditionally, the database models are created from photographs using Structure-from-Motion (SfM) techniques, which require large collections of densely sampled images. In this paper, we address the question of how point cloud data from terrestrial laser scanners can be used instead to significantly reduce the data collection effort and enable more scalable localization.

The key change here is that, in contrast to SfM points, laser-scanned 3D points are not automatically associated with local image features that could be matched to query image features. In order to make this data usable for image-based localization, we explore how point cloud rendering techniques can be leveraged to create virtual views from which database features can be extracted that match real image-based features as closely as possible. We propose different rendering techniques for this task, experimentally quantify how they affect feature repeatability, and demonstrate their benefit for image-based localization.

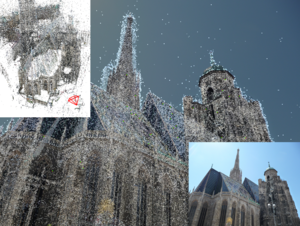

A Scalable Collaborative Online System for City Reconstruction

Recent advances in Structure-from-Motion and Bundle Adjustment allow us to efficiently reconstruct large 3D scenes from millions of images. However, acquiring the imagery necessary to reconstruct a whole city and not only its landmark buildings still poses a tremendous problem. In this paper, we therefore present an online system for collaborative city reconstruction that is based on crowdsourcing the image acquisition. Employing publicly available building footprints to reconstruct individual blocks rather than the whole city at once enables our system to easily scale to large urban environments. In order to map all partial reconstructions into a single coordinate frame, we develop a robust alignment scheme that registers the individual point clouds to their corresponding footprints based on GPS coordinates. Our approach can handle noise and outliers in the GPS positions and allows us to detect wrong alignments caused by the typical issues in the context of crowdsourcing applications such as malicious or improper image uploads. Furthermore, we present an efficient rendering method to obtain dense and textured views of the resulting point clouds without requiring costly multi-view stereo methods.

@inproceedings{untzelmann2013iccv,

author = "Untzelmann, Ole and Sattler, Torsten and Middelberg, Sven and Kobbelt, Leif",

title = "{A Scalable Collaborative Online System for City Reconstruction}",

booktitle = "{The IEEE International Conference on Computer Vision (ICCV) Workshops}",

year = {2013}

}
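The GPS-based alignment described in the abstract above has to tolerate noisy and outlying GPS positions. As a rough illustration only, the sketch below estimates a 2D similarity transform (rotation, scale, translation) between camera positions in a local reconstruction and their GPS positions with a generic hypothesize-and-verify loop. This is not the paper's alignment scheme: the function names, the tolerance `tol`, the exhaustive enumeration of point pairs, and the assumption that GPS positions have already been projected to a metric plane are all hypothetical choices made for this example.

```python
from itertools import combinations

def fit_similarity(z1, z2, w1, w2):
    """Fit w = a*z + b from two point pairs; points are complex numbers x + 1j*y."""
    a = (w1 - w2) / (z1 - z2)  # complex a encodes rotation and scale
    b = w1 - a * z1            # complex b encodes translation
    return a, b

def robust_align(local_xy, gps_xy, tol=1.0):
    """Return (a, b, inlier_indices) for the hypothesis with the most inliers.

    local_xy and gps_xy are index-aligned lists of complex positions.
    tol is a hypothetical inlier threshold in the units of gps_xy.
    """
    best = (None, None, [])
    for i, j in combinations(range(len(local_xy)), 2):
        if local_xy[i] == local_xy[j]:
            continue  # degenerate pair, no unique transform
        a, b = fit_similarity(local_xy[i], local_xy[j], gps_xy[i], gps_xy[j])
        # Verify the hypothesis against all position pairs.
        inliers = [k for k, (z, w) in enumerate(zip(local_xy, gps_xy))
                   if abs(a * z + b - w) <= tol]
        if len(inliers) > len(best[2]):
            best = (a, b, inliers)
    return best
```

Enumerating all pairs keeps the example deterministic; for many cameras, random sampling of pairs as in RANSAC would be the usual substitute. An alignment supported by very few inliers could be flagged as suspicious, which is one conceivable way to surface the wrong alignments the abstract mentions.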

Improving Image-Based Localization by Active Correspondence Search

We propose a powerful pipeline for determining the pose of a query image relative to a point cloud reconstruction of a large scene consisting of more than one million 3D points. The key component of our approach is an efficient and effective search method to establish matches between image features and scene points needed for pose estimation. Our main contribution is a framework for actively searching for additional matches, based on both 2D-to-3D and 3D-to-2D search. A unified formulation of search in both directions allows us to exploit the distinct advantages of both strategies, while avoiding their weaknesses. Due to active search, the resulting pipeline is able to close the gap in registration performance observed between efficient search methods and approaches that are allowed to run for multiple seconds, without sacrificing run-time efficiency. Our method achieves the best registration performance published so far on three standard benchmark datasets, with run-times comparable or superior to the fastest state-of-the-art methods.

The original publication will be available at www.springerlink.com upon publication.

Image Retrieval for Image-Based Localization Revisited

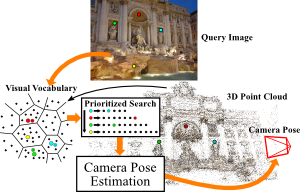

To reliably determine the camera pose of an image relative to a 3D point cloud of a scene, correspondences between 2D features and 3D points are needed. Recent work has demonstrated that directly matching the features against the points outperforms methods that take an intermediate image retrieval step in terms of the number of images that can be localized successfully. Yet, direct matching is inherently less scalable than retrieval-based approaches. In this paper, we therefore analyze the algorithmic factors that cause the performance gap and identify false positive votes as the main source of the gap. Based on a detailed experimental evaluation, we show that retrieval methods using a selective voting scheme are able to outperform state-of-the-art direct matching methods. We explore how both selective voting and correspondence computation can be accelerated by using a Hamming embedding of feature descriptors. Furthermore, we introduce a new dataset with challenging query images for the evaluation of image-based localization.
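To make the Hamming embedding mentioned above more concrete, here is a minimal sketch of the general idea: each descriptor is projected onto a few random directions and thresholded at the median of training projections, giving a compact binary signature that can be compared by Hamming distance. This is a simplification and not the paper's implementation: the function names are hypothetical, a Gaussian random projection stands in for an orthogonal one, and a single set of thresholds is learned instead of one set per visual word.

```python
import random

def make_projection(dim, bits, seed=0):
    """Random projection matrix with `bits` rows of length `dim` (assumed Gaussian)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)]

def project(P, descriptor):
    """Project a descriptor onto every row of P."""
    return [sum(w * x for w, x in zip(row, descriptor)) for row in P]

def learn_thresholds(P, training):
    """Per-bit thresholds: the median of the projected training descriptors."""
    projected = [project(P, d) for d in training]
    thresholds = []
    for b in range(len(P)):
        values = sorted(v[b] for v in projected)
        thresholds.append(values[len(values) // 2])
    return thresholds

def signature(P, thresholds, descriptor):
    """Binary signature packed into an int; bit b is set if projection b exceeds its threshold."""
    bits = 0
    for b, (v, t) in enumerate(zip(project(P, descriptor), thresholds)):
        if v > t:
            bits |= 1 << b
    return bits

def hamming(a, b):
    """Number of differing bits between two signatures."""
    return bin(a ^ b).count("1")
```

Comparing two signatures costs one XOR and a bit count, which is why such embeddings are attractive for accelerating both voting and correspondence computation compared with full descriptor distances.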

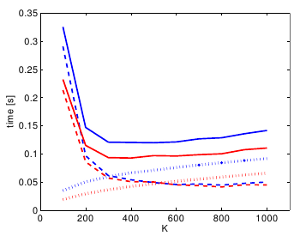

Towards Fast Image-Based Localization on a City-Scale

Recent developments in Structure-from-Motion approaches allow the reconstruction of large parts of urban scenes. The available models can in turn be used for accurate image-based localization via pose estimation from 2D-to-3D correspondences. In this paper, we analyze a recently proposed localization method that achieves state-of-the-art localization performance using a visual vocabulary quantization for efficient 2D-to-3D correspondence search. We show that using only a subset of the original models allows the method to achieve a similar localization performance. While this gain can come at additional computational cost depending on the dataset, the reduced model requires significantly less memory, allowing the method to handle even larger datasets. We study how the size of the subset, as well as the quantization, affect both the search for matches and the time needed by RANSAC for pose estimation.
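The vocabulary-based 2D-to-3D search referred to above can be sketched roughly as follows: descriptors of 3D points are assigned to their nearest visual word, and a query feature is compared only against the points stored under its own word, followed by a ratio test. All names, the brute-force word assignment, the ratio value of 0.7, and the decision to reject words holding fewer than two points are assumptions made for illustration; they are not taken from the analyzed method.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_word(words, descriptor):
    """Index of the closest visual word (brute force for clarity)."""
    return min(range(len(words)), key=lambda w: sq_dist(words[w], descriptor))

def build_index(words, point_descriptors):
    """Map each visual word to the ids of the 3D points quantized to it."""
    index = {}
    for point_id, descriptor in point_descriptors.items():
        index.setdefault(nearest_word(words, descriptor), []).append(point_id)
    return index

def match_2d_to_3d(words, index, point_descriptors, query, ratio=0.7):
    """Return the id of the matching 3D point for a query descriptor, or None."""
    candidates = index.get(nearest_word(words, query), [])
    if len(candidates) < 2:
        return None  # ratio test needs two neighbors (assumed policy)
    ranked = sorted(candidates, key=lambda pid: sq_dist(point_descriptors[pid], query))
    best, second = ranked[0], ranked[1]
    d_best = sq_dist(point_descriptors[best], query)
    d_second = sq_dist(point_descriptors[second], query)
    # Distances are squared, so the ratio threshold is squared as well.
    return best if d_best < ratio ** 2 * d_second else None
```

In this sketch, a coarser vocabulary puts more points under each word, so the linear search per query grows while fewer matches are lost to quantization. Keeping only a subset of the model shrinks `point_descriptors` and therefore the memory footprint of the index.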

The original publication will be available at www.springerlink.com upon publication.

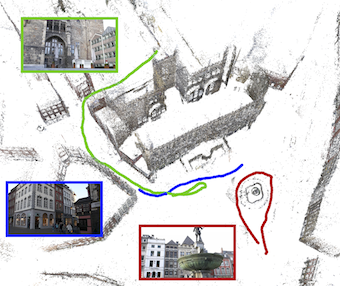

Fast Image-Based Localization using Direct 2D-to-3D Matching

Estimating the position and orientation of a camera given an image taken by it is an important step in many interesting applications such as tourist navigation, robotics, augmented reality and incremental Structure-from-Motion reconstruction. To do so, we have to find correspondences between structures seen in the image and a 3D representation of the scene. Due to the recent advances in the field of Structure-from-Motion, it is now possible to reconstruct large scenes up to the level of an entire city in very little time. We can use these results to enable image-based localization of a camera (and its user) on a large scale. However, when processing such large data, the computation of correspondences between points in the image and points in the model quickly becomes the bottleneck of the localization pipeline. Therefore, it is extremely important to develop methods that are able to effectively and efficiently handle such large environments and that scale well to even larger scenes.

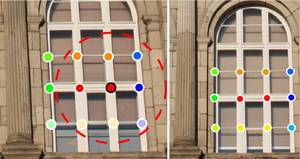

SCRAMSAC: Improving RANSAC's Efficiency with a Spatial Consistency Filter

Geometric verification with RANSAC has become a crucial step for many local-feature-based matching applications. Therefore, the details of its implementation are directly relevant for an application's run-time and the quality of the estimated results. In this paper, we propose a RANSAC extension that is several orders of magnitude faster than standard RANSAC and as fast as, and more robust to degenerate configurations than, PROSAC, the currently fastest RANSAC extension from the literature. In addition, our proposed method is simple to implement and does not require parameter tuning. Its main component is a spatial consistency check that results in a reduced correspondence set with a significantly increased inlier ratio, leading to faster convergence of the remaining estimation steps. In addition, we experimentally demonstrate that RANSAC can operate entirely on the reduced set not only for sampling, but also for its consensus step, leading to additional speed-ups. The resulting approach is widely applicable and can be readily combined with other extensions from the literature. We quantitatively evaluate our approach's robustness on a variety of challenging datasets and compare its performance to the state-of-the-art.
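The spatial consistency check described above can be illustrated with a minimal sketch: a correspondence is kept only if enough of the matches near it in the first image also land near its partner in the second image. The fixed pixel `radius`, the `min_fraction` threshold, and the function name are assumptions for this example and are not values or identifiers from the paper.

```python
from math import hypot

def spatial_consistency_filter(matches, radius=30.0, min_fraction=0.5):
    """Keep matches whose spatial neighbors agree in both images.

    matches: list of ((x1, y1), (x2, y2)) putative correspondences.
    radius and min_fraction are illustrative values only.
    """
    kept = []
    for i, (p, q) in enumerate(matches):
        neighbors = 0
        consistent = 0
        for j, (p2, q2) in enumerate(matches):
            if i == j:
                continue
            # A neighbor is any other match whose first-image point lies near p.
            if hypot(p2[0] - p[0], p2[1] - p[1]) <= radius:
                neighbors += 1
                # It is consistent if its second-image point also lies near q.
                if hypot(q2[0] - q[0], q2[1] - q[1]) <= radius:
                    consistent += 1
        # Matches without any neighbor cannot be verified and are dropped here.
        if neighbors > 0 and consistent / neighbors >= min_fraction:
            kept.append((p, q))
    return kept
```

The filtered list would then be handed to an ordinary RANSAC loop. Because most surviving matches are inliers, far fewer samples are needed before a good hypothesis is drawn. The quadratic neighbor search is kept for readability; a grid or k-d tree would be the obvious replacement for large match sets.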