Publications

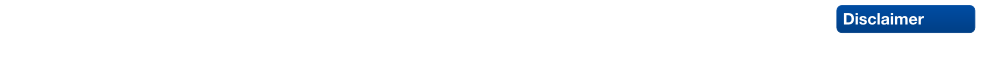

Character Reconstruction and Animation from Uncalibrated Video

We present a novel method to reconstruct 3D character models from video. The main conceptual contribution is that the reconstruction can be performed from a single uncalibrated video sequence which shows the character in articulated motion. We reduce this generalized problem setting to the easier case of multi-view reconstruction of a rigid scene by applying pose synchronization of the character between frames. This is enabled by two central technical contributions. First, based on a generic character shape template, we propose a new mesh-based technique for accurate shape tracking. This method successfully handles the complex occlusion issues that occur when tracking the motion of an articulated character. Second, we show that image-based 3D reconstruction becomes possible by deforming the tracked character shapes as-rigid-as-possible into a common pose using motion capture data. After pose synchronization, several partial reconstructions can be merged to create a single, consistent 3D character model. We integrated these components into a simple interactive framework, which allows for straightforward generation and animation of 3D models for a variety of character shapes from uncalibrated monocular video.

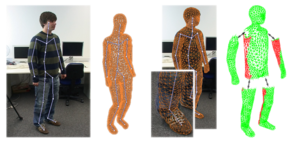

Markerless Reconstruction of Dynamic Facial Expressions

In this paper we combine methods from the field of computer vision with surface editing techniques to generate animated faces that are all in full correspondence with each other. The input to our system consists of synchronized video streams from multiple cameras. The system produces a sequence of triangle meshes with fixed connectivity, representing the dynamics of the captured face. Having carefully weighed the requirements and characteristics of the problem, we chose the following system design: we deform an initial face template using movements estimated from the video streams. To increase the robustness of the initial reconstruction, we use a morphable model as a shape prior. However, using an efficient Surfel Fitting technique, we are still able to precisely capture face shapes that are not part of the PCA model. In the deformation stage, we use a 2D mesh-based tracking approach to establish correspondences in time. We then reconstruct image samples in 3D using the same Surfel Fitting technique, and finally use the reconstructed points to robustly deform the initially reconstructed face.

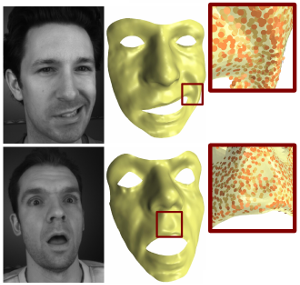

An Intuitive Interface for Interactive High Quality Image-Based Modeling

(Proc. of Pacific Graphics 2009)

We present the design of an interactive image-based modeling tool that enables a user to quickly generate detailed, textured 3D models from a set of calibrated input images. Our main contribution is an intuitive user interface that is entirely based on simple 2D painting operations and requires neither technical expertise from the user nor difficult pre-processing of the input images. One central component of our tool is a GPU-based multi-view stereo reconstruction scheme, implemented as an incremental algorithm that runs in the background during user interaction so that the user does not notice any significant response delay.

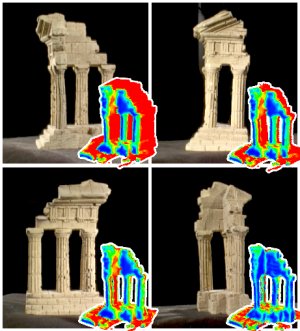

Image Selection For Improved Multi-View Stereo

The Middlebury Multi-View Stereo evaluation clearly shows that the quality and speed of most multi-view stereo algorithms depend significantly on the number and selection of input images. In general, not all input images contribute equally to the quality of the output model, since several images may contain similar and hence redundant visual information. This leads to unnecessarily increased processing times. On the other hand, a certain degree of redundancy can help to improve the reconstruction in more "difficult" regions of a model. In this paper we propose an image selection scheme for multi-view stereo which results in improved reconstruction quality compared to uniformly distributed views. Our method is tuned towards the typical requirements of current multi-view stereo algorithms, and is based on the idea of incrementally selecting images so that the overall coverage of a simultaneously generated proxy is guaranteed without adding too much redundant information. Critical regions such as cavities are detected by an estimate of the local photo-consistency and are improved by adding further views. Our method is highly efficient, since most computations can be offloaded to the GPU. We evaluate our method with four different methods participating in the Middlebury benchmark and show that in each case reconstructions based on our selected images yield an improved output quality while at the same time reducing the processing time considerably.
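The incremental selection idea can be illustrated with a greedy coverage loop. This is only a minimal sketch under simplifying assumptions, not the method from the paper: the proxy is reduced to a set of patch ids, each view is described by the hypothetical `visible` set of patches it sees, and the photo-consistency test for critical regions is left out entirely.

```python
def select_views(visible, min_gain=1):
    """Greedily pick views until no view adds enough new proxy coverage.

    visible  -- dict mapping a view id to the set of proxy patch ids it sees
                (hypothetical input; a real system would derive it by rendering)
    min_gain -- stop once the best remaining view adds fewer new patches
    """
    covered = set()
    selected = []
    remaining = dict(visible)
    while remaining:
        # Iterate in sorted order so ties are broken deterministically.
        best = max(sorted(remaining), key=lambda v: len(remaining[v] - covered))
        gain = len(remaining[best] - covered)
        if gain < min_gain:
            break  # every remaining view is redundant
        selected.append(best)
        covered |= remaining.pop(best)
    return selected, covered


views = {"a": {1, 2, 3}, "b": {3, 4}, "c": {1, 2}, "d": {4, 5, 6}}
selected, covered = select_views(views)
print(selected)  # ['a', 'd']
```

Views `b` and `c` are skipped because everything they see is already covered, which mirrors the goal of avoiding redundant images.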

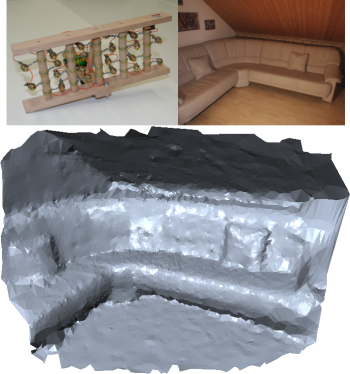

LaserBrush: A Flexible Device for 3D Reconstruction of Indoor Scenes

While many techniques for the 3D reconstruction of small to medium sized objects have been proposed in recent years, the reconstruction of entire scenes is still a challenging task. This is especially true for indoor environments, where existing active reconstruction techniques are usually quite expensive and passive, image-based techniques tend to fail due to high scene complexity, difficult lighting situations, or shiny surface materials. To fill this gap we present a novel low-cost method for the reconstruction of depth maps using a video camera and an array of laser pointers mounted on a hand-held rig. Similar to existing laser-based active reconstruction techniques, our method is based on a fixed camera, moving laser rays, and depth computation by triangulation. However, unlike traditional methods, the position and orientation of the laser rig do not need to be calibrated a priori and no precise control is necessary during image capture. Instead, the user moves the laser rig freely through the scene in a brush-like manner, letting the laser points sweep over the scene's surface. We do not impose any constraints on the distribution of the laser rays, the motion of the laser rig, or the scene geometry except that in each frame at least six laser points have to be visible. Our main contributions are twofold. The first is the depth map reconstruction technique based on irregularly oriented laser rays that, by exploiting robust sampling techniques, is able to cope with missing and even wrongly detected laser points. The second is a smoothing operator for the reconstructed geometry specifically tailored to our setting that removes most of the inevitable noise introduced by calibration and detection errors without damaging important surface features like sharp edges.
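Depth computation by triangulation can be sketched as finding the point closest to both a camera viewing ray and a laser ray. The sketch below assumes that both rays are already known in a common coordinate frame; estimating the pose of the uncalibrated rig, which is the actual contribution described above, is not covered. The function name and argument layout are illustrative.

```python
def triangulate(p1, d1, p2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays p1 + s*d1 and p2 + t*d2."""
    dot = lambda u, v: sum(a * b for a, b in zip(u, v))
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; no stable triangulation")
    s = (b * e - c * d) / denom  # parameter along the camera ray
    t = (a * e - b * d) / denom  # parameter along the laser ray
    q1 = tuple(p + s * k for p, k in zip(p1, d1))
    q2 = tuple(p + t * k for p, k in zip(p2, d2))
    return tuple((u + v) / 2 for u, v in zip(q1, q2))


# Camera at the origin looking along +z; laser at x = 1 aimed at (0, 0, 2).
point = triangulate((0, 0, 0), (0, 0, 1), (1, 0, 0), (-1, 0, 2))
print(point)  # (0.0, 0.0, 2.0)
```

With noisy detections the two rays rarely intersect exactly, which is why the midpoint of the shortest connecting segment is used rather than an exact intersection.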

Robust Reconstruction of Watertight 3D Models from Non-uniformly Sampled Point Clouds Without Normal Information

We present a new volumetric method for reconstructing watertight triangle meshes from arbitrary, unoriented point clouds. While previous techniques usually reconstruct surfaces as the zero level-set of a signed distance function, our method uses an unsigned distance function and hence does not require any information about the local surface orientation. Our algorithm estimates local surface confidence values within a dilated crust around the input samples. The surface which maximizes the global confidence is then extracted by computing the minimum cut of a weighted spatial graph structure. We present an algorithm that efficiently converts this cut into a closed, manifold triangle mesh with a minimal number of vertices. The use of an unsigned distance function avoids the topological noise artifacts caused by misalignment of 3D scans, which are common to most volumetric reconstruction techniques. Owing to a hierarchical approach, our method efficiently produces solid models of low genus even for noisy and highly irregular data containing large holes, without losing fine details in densely sampled regions. We show several examples for different application settings such as model generation from raw laser-scanned data, image-based 3D reconstruction, and mesh repair.
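To make the role of the unsigned distance function concrete, the following sketch computes it by brute force and marks the voxels of a small grid that fall inside a crust of a given radius around the samples. It is an illustration only: the function names, the integer grid, and the fixed `radius` are assumptions, and neither the confidence estimation nor the minimum cut is shown.

```python
import math
from itertools import product


def unsigned_distance(p, samples):
    """Distance from p to the nearest sample; no normals or orientation needed."""
    return min(math.dist(p, s) for s in samples)


def crust_voxels(samples, lo, hi, radius):
    """Integer grid points in [lo, hi]^3 within `radius` of some sample."""
    axis = range(lo, hi + 1)
    return [v for v in product(axis, repeat=3)
            if unsigned_distance(v, samples) <= radius]


samples = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(unsigned_distance((0.0, 0.0, 2.0), samples))  # 2.0
print(len(crust_voxels(samples, -1, 2, 1.0)))       # 12
```

Because the distance carries no sign, it cannot by itself say which side of the surface a voxel lies on; in the abstract above, that decision is made globally by the minimum cut.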

Iterative Multi-View Plane Fitting

We present a method for the reconstruction of 3D planes from calibrated 2D images. Given a set of pixels Ω in a reference image, our method computes a plane which best approximates that part of the scene which has been projected to Ω by exploiting additional views. Based on classical image alignment techniques we derive linear matching equations minimally parameterized by the three parameters of an object-space plane. The resulting iterative algorithm is highly robust because it is able to integrate over large image regions due to the correct object-space approximation and hence is not limited to comparing small image patches. Our method can be applied to a pair of stereo images but is also able to take advantage of the additional information provided by an arbitrary number of input images. A thorough experimental validation shows that these properties enable robust convergence especially under the influence of image sensor noise and camera calibration errors.
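A plane described by three parameters induces a homography between two calibrated views, which is what allows whole image regions to be compared. The sketch below assumes normalized image coordinates (intrinsics already removed), a relative pose with X2 = R X1 + t, and a plane written as m · X = 1 in the reference camera frame; this parameterization is an assumption for illustration and may differ from the one used in the paper.

```python
def plane_homography(R, t, m):
    """H = R + t m^T, mapping reference-view points on the plane into view 2."""
    return [[R[i][j] + t[i] * m[j] for j in range(3)] for i in range(3)]


def warp(H, x, y):
    """Apply a 3x3 homography to the normalized image point (x, y)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = (-1.0, 0.0, 0.0)   # second camera shifted by one unit along +x
m = (0.0, 0.0, 0.5)    # the plane z = 2, since m . X = 1
H = plane_homography(identity, t, m)

# The 3D point (0.4, 0.2, 2) projects to (0.2, 0.1) in the reference view
# and to (-0.3, 0.1) in the second view.
print(warp(H, 0.2, 0.1))
```

An iterative fitting scheme would adjust the three entries of `m` until the reference region, warped through `H`, matches the other images.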

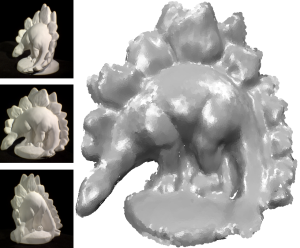

Hierarchical Volumetric Multi-view Stereo Reconstruction of Manifold Surfaces based on Dual Graph Embedding

This paper presents a new volumetric stereo algorithm to reconstruct the 3D shape of an arbitrary object. Our method is based on finding the minimum cut in an octahedral graph structure embedded into the volumetric grid, which establishes a well-defined relationship between the integrated photo-consistency function of a region in space and the corresponding edge weights of the embedded graph. This new graph structure allows for a highly efficient hierarchical implementation supporting high volumetric resolutions and large numbers of input images. Furthermore, we show how the resulting cut surface can be directly converted into a consistent, closed, and manifold mesh. Hence this work provides a complete multi-view stereo reconstruction pipeline. We demonstrate the robustness and efficiency of our technique with a number of high-quality reconstructions of real objects.

Robust and Efficient Photo-Consistency Estimation for Volumetric 3D Reconstruction

Estimating photo-consistency is one of the most important ingredients for any 3D stereo reconstruction technique that is based on a volumetric scene representation. This paper presents a new, illumination-invariant photo-consistency measure for high-quality volumetric 3D reconstruction from calibrated images. In contrast to current standard methods such as normalized cross-correlation, it supports unconstrained camera setups and non-planar surface approximations. We show how this measure can be embedded into a highly efficient, completely hardware-accelerated volumetric reconstruction pipeline by exploiting current graphics processors. We provide examples of high-quality reconstructions with computation times of only a few seconds to minutes, even for large numbers of cameras and high volumetric resolutions.
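For reference, the standard baseline named above, normalized cross-correlation, can be sketched in a few lines. This is not the new measure proposed in the paper; it only shows what invariance to gain and offset changes in intensity means for a pair of sampled patches, here given as flat lists of hypothetical gray values.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equally sized intensity lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a constant patch carries no texture to correlate
    return sum(x * y for x, y in zip(da, db)) / denom


patch = [10, 20, 30, 40]
brighter = [2 * v + 5 for v in patch]  # same texture under different gain/offset
print(ncc(patch, brighter))            # close to 1
print(ncc(patch, patch[::-1]))         # close to -1
```

A score near 1 indicates that both patches are likely to show the same surface point, even when the two images differ in exposure.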