Profile

Patric Schmitz, M.Sc.

Presentations

| Event | Type | Title |
|---|---|---|
| Thrill of Deception. From Ancient Art to Virtual Reality, Ludwig Forum Aachen 2019 | Demo | Virtual Walk Through the Aachen Cathedral |
| Mensch und Computer 2018 | Demo | Real Walking in Virtual Spaces: Visiting the Aachen Cathedral |
| IEEE VR Conference 2018 | Paper | You Spin my Head Right Round: Threshold of Limited Immersion for Rotation Gains in Redirected Walking |
| VR in Industry Conference 2017 | Talk | Valuable Assistance in Immersive Virtual Environments: Virtual Agents and Redirected Walking |
| ICT Projekthaus Workshop 2017 | Talk | Getting Around in Cyberspace: The Perception of Space in Virtual Reality |

Publications

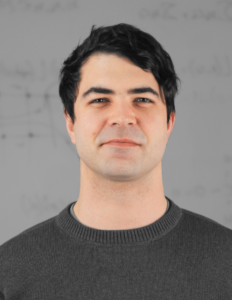

Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior

Immersive authoring enables content creation for virtual environments without a break of immersion. To enable immersive authoring of reactive behavior for a broad audience, we present modulation mapping, a simplified visual programming technique. To evaluate the applicability of our technique, we investigate the role of reference frames in which the programming elements are positioned, as this can affect the user experience. Thus, we developed two interface layouts: "surround-referenced" and "object-referenced". The former positions the programming elements relative to the physical tracking space, and the latter relative to the virtual scene objects. We compared the layouts in an empirical user study (n = 34) and found the surround-referenced layout faster, lower in task load, less cluttered, easier to learn and use, and preferred by users. Qualitative feedback, however, revealed the object-referenced layout as more intuitive, engaging, and valuable for visual debugging. Based on the results, we propose initial design implications for immersive authoring of reactive behavior by visual programming. Overall, modulation mapping proved to be an effective means for participants to create reactive behavior.

Honorable Mention for Best Paper!

@inproceedings{eroglu2024choose,

title={Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior},

author={Eroglu, Sevinc and Schmitz, Patric and Sinke, Kilian and Anders, David and Kuhlen, Torsten Wolfgang and Weyers, Benjamin},

booktitle={30th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2024}

}

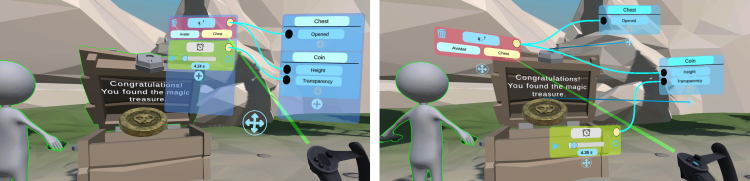

Interactive Segmentation of Textured Point Clouds

We present a method for the interactive segmentation of textured 3D point clouds. The problem is formulated as a minimum graph cut on a k-nearest neighbor graph and leverages the rich information contained in high-resolution photographs as the discriminative feature. We demonstrate that the achievable segmentation accuracy is significantly improved compared to using an average color per point as in prior work. The method is designed to work efficiently on large datasets and yields results at interactive rates. This way, an interactive workflow can be realized in an immersive virtual environment, which supports the segmentation task by improved depth perception and the use of tracked 3D input devices. Our method enables the fast and convenient creation of high-quality segmentations of textured point clouds.
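The minimum-cut formulation on a k-nearest neighbor graph can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not the paper's implementation: the points live on a line, each point carries a single scalar feature standing in for the photo-derived feature, the pairwise weight is a Gaussian of the feature difference, and the cut is computed with a plain Edmonds-Karp max flow.

```python
import math
from collections import deque

HARD = 1e6  # assumed large capacity that pins user-marked seeds to a label


def knn_edges(positions, k):
    """Undirected k-nearest-neighbor edges over 1D positions (brute force)."""
    edges = set()
    for i, p in enumerate(positions):
        others = [j for j in range(len(positions)) if j != i]
        nearest = sorted(others, key=lambda j: abs(positions[j] - p))[:k]
        for j in nearest:
            edges.add((min(i, j), max(i, j)))
    return edges


def min_cut_source_side(capacity, source, sink):
    """Run Edmonds-Karp; return the nodes still reachable from the source."""
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return set(parent)  # source side of the minimum cut
        # Find the bottleneck along the path, then push that much flow.
        bottleneck, v = math.inf, sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] = residual[v].get(u, 0.0) + bottleneck
            v = u


def segment(positions, features, fg_seeds, bg_seeds, k=2, sigma=0.1):
    """Return the indices of points labeled foreground by the minimum cut."""
    src, snk = "src", "snk"
    capacity = {i: {} for i in range(len(positions))}
    capacity[src], capacity[snk] = {}, {}
    for i, j in knn_edges(positions, k):
        diff = features[i] - features[j]
        w = math.exp(-(diff * diff) / (2.0 * sigma * sigma))
        capacity[i][j] = w  # similar neighbors are expensive to separate
        capacity[j][i] = w
    for i in fg_seeds:
        capacity[src][i] = HARD
    for i in bg_seeds:
        capacity[i][snk] = HARD
    side = min_cut_source_side(capacity, src, snk)
    return sorted(i for i in side if i != src)


POSITIONS = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
FEATURES = [0.9, 0.85, 0.8, 0.2, 0.15, 0.1]  # feature jump between 2 and 3
labels = segment(POSITIONS, FEATURES, fg_seeds=[0], bg_seeds=[5])
print(labels)  # [0, 1, 2]
```

With one foreground seed and one background seed, the cut falls on the single edge that crosses the feature jump, since that is by far the cheapest edge to sever.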

@inproceedings {10.2312:vmv.20221200,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Interactive Segmentation of Textured Point Clouds}},

author = {Schmitz, Patric and Suder, Sebastian and Schuster, Kersten and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221200}

}

Compression and Rendering of Textured Point Clouds via Sparse Coding

Splat-based rendering techniques produce highly realistic renderings from 3D scan data without prior mesh generation. Mapping high-resolution photographs to the splat primitives enables detailed reproduction of surface appearance. However, in many cases these massive datasets do not fit into GPU memory. In this paper, we present a compression and rendering method that is designed for large textured point cloud datasets. Our goal is to achieve compression ratios that outperform generic texture compression algorithms, while still retaining the ability to efficiently render without prior decompression. To achieve this, we resample the input textures by projecting them onto the splats and create a fixed-size representation that can be approximated by a sparse dictionary coding scheme. Each splat has a variable number of codeword indices and associated weights, which define the final texture as a linear combination during rendering. For further reduction of the memory footprint, we compress geometric attributes by careful clustering and quantization of local neighborhoods. Our approach reduces the memory requirements of textured point clouds by one order of magnitude, while retaining the possibility to efficiently render the compressed data.
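The per-splat reconstruction described above, a linear combination of dictionary codewords selected by index and scaled by weight, can be sketched as follows. The dictionary contents, patch size, and function name are hypothetical; the paper's actual dictionary learning and GPU decoding are not shown.

```python
def reconstruct_texture(dictionary, indices, weights):
    """Return the weighted sum of the selected dictionary atoms."""
    if len(indices) != len(weights):
        raise ValueError("each codeword index needs exactly one weight")
    size = len(dictionary[0])
    texture = [0.0] * size
    for idx, w in zip(indices, weights):
        atom = dictionary[idx]
        for i in range(size):
            texture[i] += w * atom[i]
    return texture


# Hypothetical toy dictionary: three atoms, each a flattened 2x2 patch.
DICTIONARY = [
    [1.0, 1.0, 1.0, 1.0],  # constant patch
    [1.0, 0.0, 1.0, 0.0],  # vertical stripes
    [1.0, 1.0, 0.0, 0.0],  # horizontal stripes
]

# A splat stores only a few (index, weight) pairs instead of raw texels.
splat_texture = reconstruct_texture(DICTIONARY, indices=[0, 2], weights=[0.5, 0.25])
print(splat_texture)  # [0.75, 0.75, 0.5, 0.5]
```

In this toy case the splat stores two indices and two weights instead of four texels. Any real saving depends on patch size and the number of codewords per splat, which this sketch does not model.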

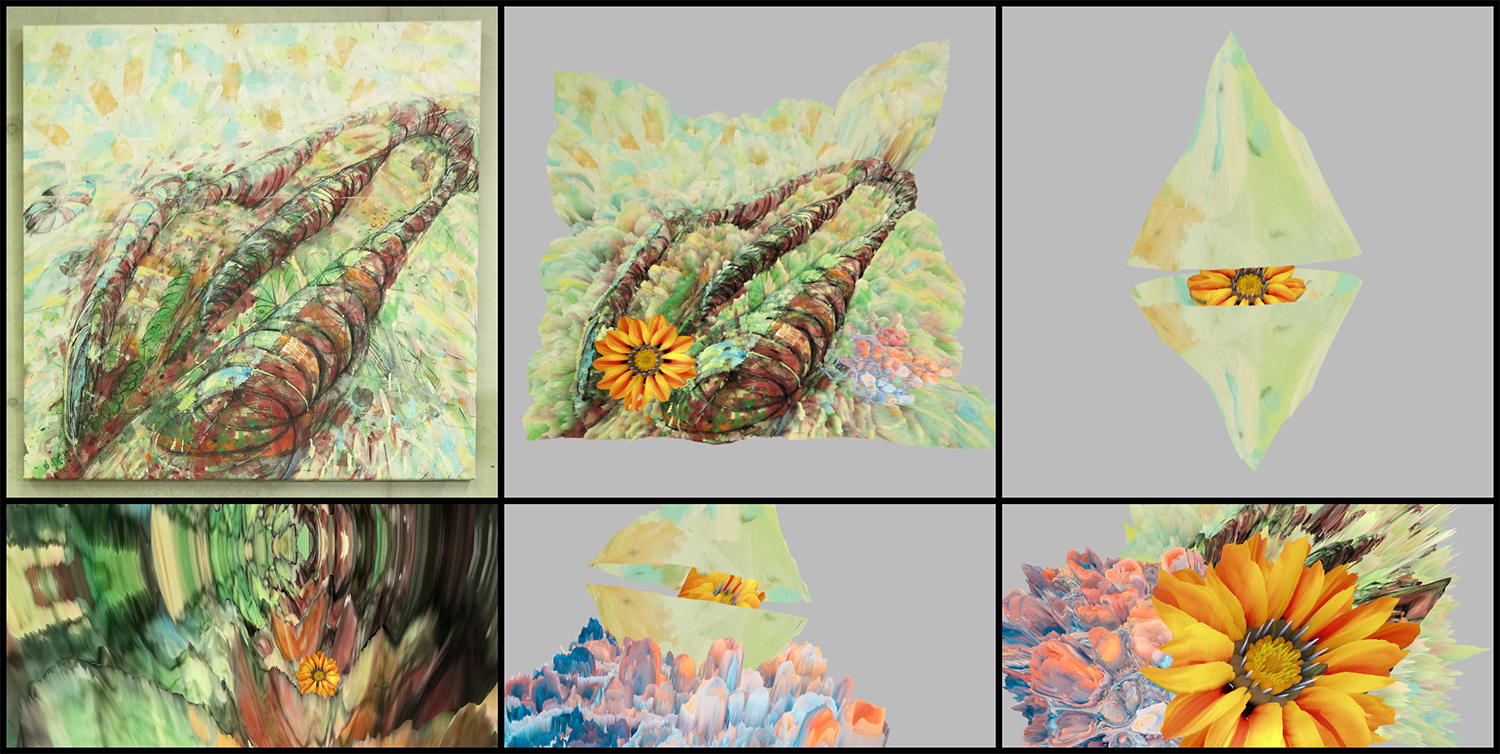

Rilievo: Artistic Scene Authoring via Interactive Height Map Extrusion in VR

The authors present a virtual authoring environment for artistic creation in VR. It enables the effortless conversion of 2D images into volumetric 3D objects. Artistic elements in the input material are extracted with a convenient VR-based segmentation tool. Relief sculpting is then performed by interactively mixing different height maps. These are automatically generated from the input image structure and appearance. A prototype of the tool is showcased in an analog-virtual artistic workflow in collaboration with a traditional painter. It combines the expressiveness of analog painting and sculpting with the creative freedom of spatial arrangement in VR.

@article{eroglu2020rilievo,

title={Rilievo: Artistic Scene Authoring via Interactive Height Map Extrusion in VR},

author={Eroglu, Sevinc and Schmitz, Patric and Martinez, Carlos Aguilera and Rusch, Jana and Kobbelt, Leif and Kuhlen, Torsten W},

journal={Leonardo},

volume={53},

number={4},

pages={438--441},

year={2020},

publisher={MIT Press}

}

High-Fidelity Point-Based Rendering of Large-Scale 3D Scan Datasets

Digitalization of 3D objects and scenes using modern depth sensors and high-resolution RGB cameras enables the preservation of human cultural artifacts at an unprecedented level of detail. Interactive visualization of these large datasets, however, is challenging without degradation in visual fidelity. A common solution is to fit the dataset into available video memory by downsampling and compression. The achievable reproduction accuracy is thereby limited for interactive scenarios, such as immersive exploration in Virtual Reality (VR). This degradation in visual realism ultimately hinders the effective communication of human cultural knowledge. This article presents a method to render 3D scan datasets with minimal loss of visual fidelity. A point-based rendering approach visualizes scan data as a dense splat cloud. For improved surface approximation of thin and sparsely sampled objects, we propose oriented 3D ellipsoids as rendering primitives. To render massive texture datasets, we present a virtual texturing system that dynamically loads required image data. It is paired with a single-pass page prediction method that minimizes visible texturing artifacts. Our system renders a challenging dataset in the order of 70 million points and a texture size of 1.2 terabytes consistently at 90 frames per second in stereoscopic VR.

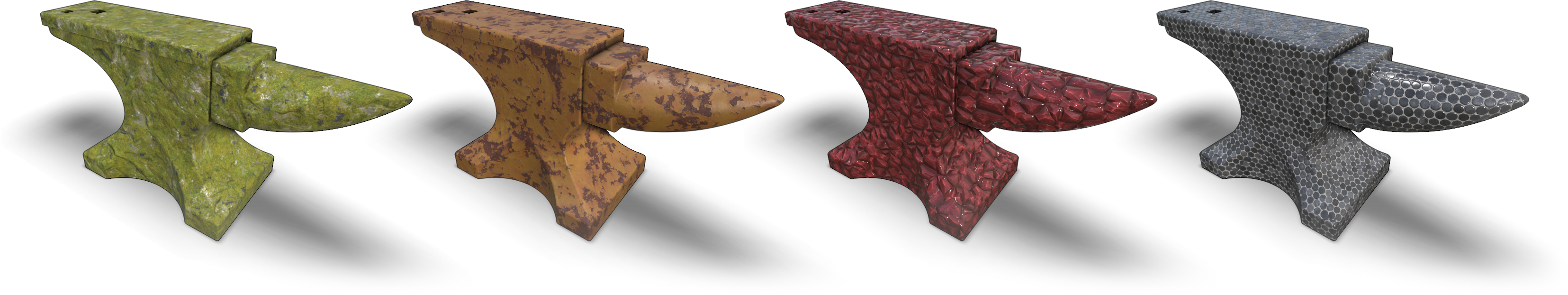

A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces

We present a method for example-based texturing of triangular 3D meshes. Our algorithm maps a small 2D texture sample onto objects of arbitrary size in a seamless fashion, with no visible repetitions and low overall distortion. It requires minimal user interaction and can be applied to complex, multi-layered input materials that are not required to be tileable. Our framework integrates a patch-based approach with per-pixel compositing. To minimize visual artifacts, we run a three-level optimization that starts with a rigid alignment of texture patches (macro scale), then continues with non-rigid adjustments (meso scale) and finally performs pixel-level texture blending (micro scale). We demonstrate that the relevance of the three levels depends on the texture content and type (stochastic, structured, or anisotropic textures).

@article{schuster2020,

author = {Schuster, Kersten and Trettner, Philip and Schmitz, Patric and Kobbelt, Leif},

title = {A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces},

year = {2020},

issue_date = {Apr 2020},

publisher = {Association for Computing Machinery},

address = {USA},

volume = {3},

number = {1},

url = {https://doi.org/10.1145/3384542},

doi = {10.1145/3384542},

journal = {Proc. ACM Comput. Graph. Interact. Tech.},

month = apr,

articleno = {1},

numpages = {19},

keywords = {material blending, surface texture synthesis, texture mapping}

}

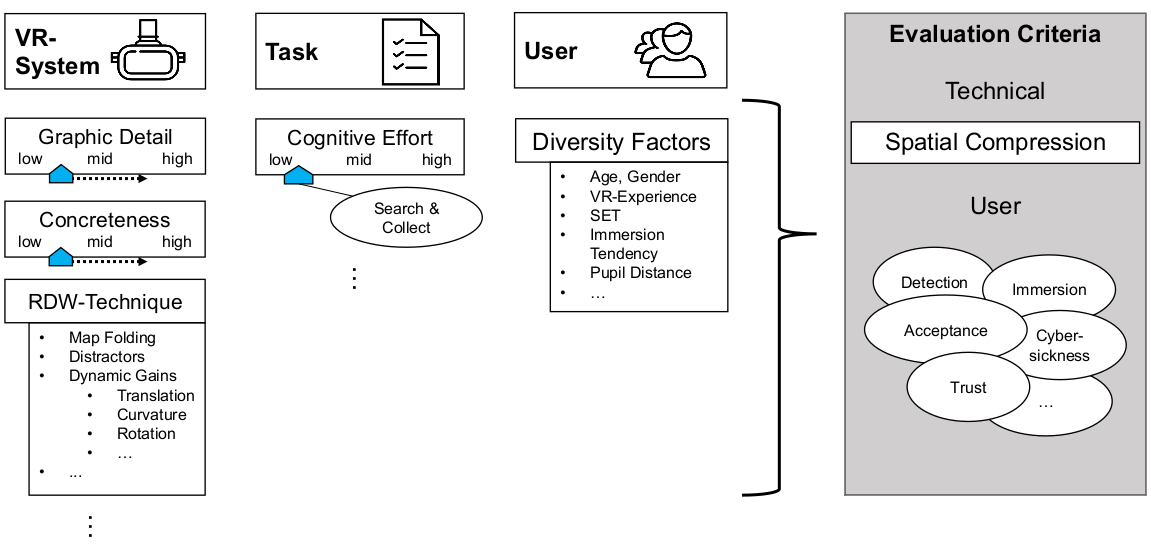

You Spin my Head Right Round: Threshold of Limited Immersion for Rotation Gains in Redirected Walking

In virtual environments, the space that can be explored by real walking is limited by the size of the tracked area. To enable unimpeded walking through large virtual spaces in small real-world surroundings, redirection techniques are used. These unnoticeably manipulate the user’s virtual walking trajectory. It is important to know how strongly such techniques can be applied without the user noticing the manipulation or getting cybersick. Previously, this was estimated by measuring a detection threshold (DT) in highly controlled psychophysical studies, which experimentally isolate the effect but do not aim for perceived immersion in the context of VR applications. While these studies suggest that only relatively low degrees of manipulation are tolerable, we claim that, besides establishing detection thresholds, it is important to know when the user’s immersion breaks. We hypothesize that the degree of unnoticed manipulation is significantly different from the detection threshold when the user is immersed in a task. We conducted three studies: a) to devise an experimental paradigm to measure the threshold of limited immersion (TLI), b) to measure the TLI for slowly decreasing and increasing rotation gains, and c) to establish a baseline of cybersickness for our experimental setup. For rotation gains greater than 1.0, we found that immersion breaks quite late after the gain is detectable. However, for gains less than 1.0, some users reported a break of immersion even before established detection thresholds were reached. Apparently, the developed metric measures an additional quality of user experience. This article contributes to the development of effective spatial compression methods by utilizing the break of immersion as a benchmark for redirection techniques.
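For readers unfamiliar with the term, a rotation gain is commonly defined as the ratio of virtual to real head rotation. The abstract does not spell this out, so the sketch below assumes that common definition, and its function names are hypothetical.

```python
def virtual_rotation(real_rotation_deg, gain):
    """Virtual rotation shown to the user for a given physical rotation."""
    return gain * real_rotation_deg


def real_rotation_needed(virtual_target_deg, gain):
    """Physical rotation the user must perform to turn by the virtual target."""
    if gain <= 0:
        raise ValueError("gain must be positive")
    return virtual_target_deg / gain


# Gain > 1.0: the virtual scene turns faster than the user's head.
print(virtual_rotation(90.0, 1.5))        # 135.0
# Gain > 1.0 also means less physical turning for a virtual half turn.
print(real_rotation_needed(180.0, 1.25))  # 144.0
```

Under this definition a gain of 1.0 leaves rotation unmodified, a gain above 1.0 amplifies it, and a gain below 1.0 attenuates it.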

Fluid Sketching — Immersive Sketching Based on Fluid Flow

Fluid artwork refers to works of art based on the aesthetics of fluid motion, such as smoke photography, ink injection into water, and paper marbling. Inspired by such types of art, we created Fluid Sketching as a novel medium for creating 3D fluid artwork in immersive virtual environments. It allows artists to draw 3D fluid-like sketches and manipulate them via six degrees of freedom input devices. Different sets of brush strokes are available, varying different characteristics of the fluid. Because of fluid's nature, the diffusion of the drawn fluid sketch is animated, and artists have control over altering the fluid properties and stopping the diffusion process whenever they are satisfied with the current result. Furthermore, they can shape the drawn sketch by directly interacting with it, either with their hand or by blowing into the fluid. We rely on particle advection via curl-noise as a fast procedural method for animating the fluid flow.
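Particle advection via curl noise can be illustrated in 2D. The velocity is taken as the curl of a scalar potential, which makes the field divergence-free, and particles are moved along it with explicit Euler steps. The sketch makes three assumptions that are not from the paper: it works in 2D rather than 3D, it uses a smooth trigonometric potential as a stand-in for a real noise function, and it estimates derivatives by central differences.

```python
import math


def potential(x, y):
    """Hypothetical smooth stand-in for a noise potential."""
    return math.sin(1.3 * x) * math.cos(0.7 * y) + 0.5 * math.sin(0.9 * x + 1.7 * y)


def curl_velocity(x, y, eps=1e-4):
    """2D curl of the potential: v = (d(psi)/dy, -d(psi)/dx)."""
    dpsi_dx = (potential(x + eps, y) - potential(x - eps, y)) / (2.0 * eps)
    dpsi_dy = (potential(x, y + eps) - potential(x, y - eps)) / (2.0 * eps)
    return dpsi_dy, -dpsi_dx


def advect(particles, dt, steps):
    """Move each particle along the velocity field with explicit Euler steps."""
    for _ in range(steps):
        moved = []
        for x, y in particles:
            vx, vy = curl_velocity(x, y)
            moved.append((x + dt * vx, y + dt * vy))
        particles = moved
    return particles


def divergence(x, y, eps=1e-3):
    """Numerical divergence of the velocity field; should be close to zero."""
    du_dx = (curl_velocity(x + eps, y)[0] - curl_velocity(x - eps, y)[0]) / (2.0 * eps)
    dv_dy = (curl_velocity(x, y + eps)[1] - curl_velocity(x, y - eps)[1]) / (2.0 * eps)
    return du_dx + dv_dy


# Two particles of a hypothetical brush stroke, advected for ten small steps.
stroke = advect([(0.0, 0.0), (1.0, 0.5)], dt=0.01, steps=10)
```

Because the field is the curl of a potential, it is divergence-free by construction and needs no pressure solve, which is one reason the approach is cheap enough for procedural animation.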

@InProceedings{Eroglu2018,

author = {Eroglu, Sevinc and Gebhardt, Sascha and Schmitz, Patric and Rausch, Dominik and Kuhlen, Torsten Wolfgang},

title = {{Fluid Sketching — Immersive Sketching Based on Fluid Flow}},

booktitle = {Proceedings of IEEE Virtual Reality Conference 2018},

year = {2018}

}

Get Well Soon! Human Factors’ Influence on Cybersickness after Redirected Walking Exposure in Virtual Reality

Cybersickness poses a crucial threat to applications in the domain of Virtual Reality. Yet, its predictors are insufficiently explored when redirection techniques are applied. Those techniques let users explore large virtual spaces by natural walking in a smaller tracked space. This is achieved by unnoticeably manipulating the user’s virtual walking trajectory. Unfortunately, this also makes the application more prone to cause Cybersickness. We conducted a user study with a semi-structured interview to get quantitative and qualitative insights into this domain. Results show that Cybersickness arises, but also eases ten minutes after the exposure. Quantitative results indicate that a tolerance towards Cybersickness might be related to self-efficacy constructs and therefore learnable or trainable, while qualitative results indicate that users’ endurance of Cybersickness is dependent on symptom factors such as intensity and duration, as well as factors of usage context and motivation. The role of Cybersickness in Virtual Reality environments is discussed in terms of the applicability of redirected walking techniques.

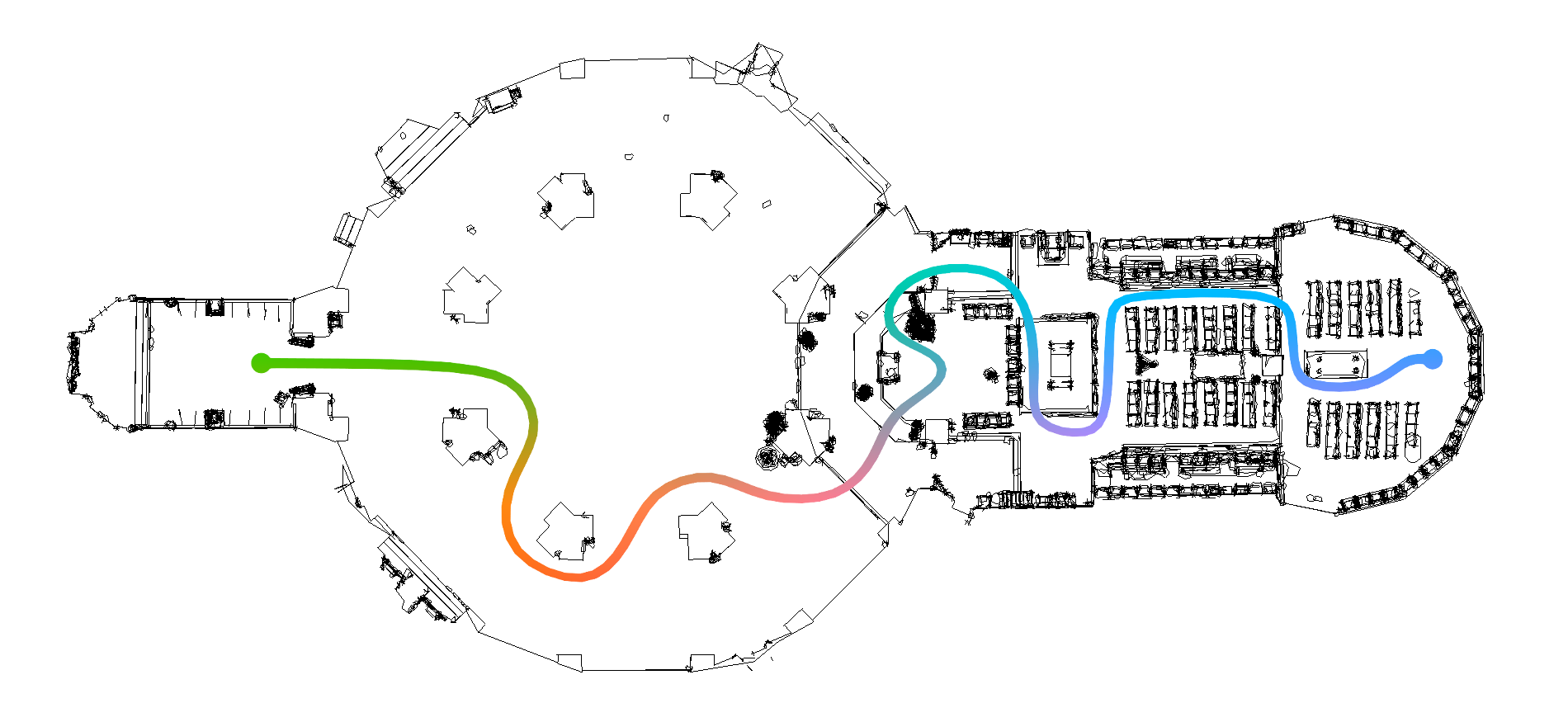

Real Walking in Virtual Spaces: Visiting the Aachen Cathedral

Real walking is the most natural and intuitive way to navigate the world around us. In Virtual Reality, the limited tracking area of commercially available systems typically does not match the size of the virtual environment we wish to explore. Spatial compression methods enable the user to walk further in the virtual environment than the real tracking bounds permit. This demo gives a glimpse into our ongoing research on spatial compression in VR. Visitors can walk through a realistic model of the Aachen Cathedral within a room-sized tracking area.

@article{schmitz2018real,

title={Real Walking in Virtual Spaces: Visiting the Aachen Cathedral},

author={Schmitz, Patric and Kobbelt, Leif},

journal={Mensch und Computer 2018-Workshopband},

year={2018},

publisher={Gesellschaft f{\"u}r Informatik eV}

}

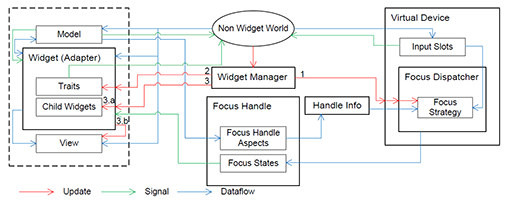

Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks

Virtual Reality (VR) has been an active field of research for several decades, with 3D interaction and 3D User Interfaces (UIs) as important sub-disciplines. However, the development of 3D interaction techniques and in particular combining several of them to construct complex and usable 3D UIs remains challenging, especially in a VR context. In addition, there is currently only limited reusable software for implementing such techniques in comparison to traditional 2D UIs. To overcome this issue, we present ViSTA Widgets, a software framework for creating 3D UIs for immersive virtual environments. It extends the ViSTA VR framework by providing functionality to create multi-device, multi-focus-strategy interaction building blocks and means to easily combine them into complex 3D UIs. This is realized by introducing a device abstraction layer along with sophisticated focus management and functionality to create novel 3D interaction techniques and 3D widgets. We present the framework and illustrate its effectiveness with code and application examples accompanied by performance evaluations.

@InProceedings{Gebhardt2016,

Title = {{Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks}},

Author = {Gebhardt, Sascha and Petersen-Krau, Till and Pick, Sebastian and Rausch, Dominik and Nowke, Christian and Knott, Thomas and Schmitz, Patric and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten W.},

Booktitle = {Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology},

Year = {2016},

Address = {New York, NY, USA},

Pages = {251--260},

Publisher = {ACM},

Series = {VRST '16},

Acmid = {2993382},

Doi = {10.1145/2993369.2993382},

ISBN = {978-1-4503-4491-3},

Keywords = {3D interaction, 3D user interfaces, framework, multi-device, virtual reality},

Location = {Munich, Germany},

Numpages = {10},

Url = {http://doi.acm.org/10.1145/2993369.2993382}

}