Data Driven 3D Face Tracking Based on a Facial Deformation Model

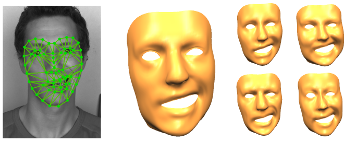

We introduce a new markerless 3D face tracking approach for 2D video streams captured by a single consumer-grade camera. Our approach tracks 2D features in the video and matches them with the projections of the corresponding feature points of a deformable 3D model, which yields an initial estimate of the shape and pose of the face. To make tracking and reconstruction more robust, we add a smoothness prior for pose changes as well as for deformations of the face. Our major contribution lies in the formulation of the smooth deformation prior, which we derive from a large database of previously captured facial animations showing different (dynamic) facial expressions of a fairly large number of subjects. We split these animation sequences into snippets of fixed length, which we use to predict the facial motion based on previous frames. To keep the deformation model compact and independent of the individual physiognomy, we represent it by deformation gradients (instead of vertex positions) and apply a principal component analysis in deformation gradient space to extract the major modes of facial deformation. Since the facial deformations are optimized during tracking, it is particularly easy to apply them to other physiognomies and thereby retarget the facial expressions. We demonstrate the effectiveness of our technique on a number of examples.
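The deformation-gradient representation and the principal component analysis mentioned above can be illustrated with a minimal NumPy sketch. It is not the implementation used in this work: the function names (`edge_frame`, `deformation_gradients`, `pca_modes`) are hypothetical, and the per-triangle construction with a scaled normal as third axis is an assumption borrowed from the common deformation transfer formulation, since the abstract does not specify these details.

```python
import numpy as np

def edge_frame(tri):
    """Return a 3x3 matrix whose columns are two triangle edges and a scaled normal."""
    v0, v1, v2 = tri
    e1, e2 = v1 - v0, v2 - v0
    n = np.cross(e1, e2)
    # Dividing by sqrt(|n|) makes the normal scale like an edge length.
    n = n / np.sqrt(np.linalg.norm(n))
    return np.column_stack([e1, e2, n])

def deformation_gradients(rest, deformed, faces):
    """Flatten the per-triangle 3x3 deformation gradients into one vector."""
    grads = []
    for f in faces:
        V = edge_frame(rest[f])       # frame of the rest-pose triangle
        W = edge_frame(deformed[f])   # frame of the deformed triangle
        grads.append(W @ np.linalg.inv(V))
    return np.concatenate([g.ravel() for g in grads])

def pca_modes(samples, k):
    """Return the mean, the first k principal directions, and their singular values."""
    mean = samples.mean(axis=0)
    centered = samples - mean
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:k], s[:k]
```

In this sketch each row of `samples` would hold the flattened deformation gradients of one captured frame relative to the subject's neutral face. Because the gradients describe the deformation rather than absolute vertex positions, the extracted modes do not depend on the individual face shape, which is the property that makes retargeting to another physiognomy straightforward.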

VMV 2015 Honorable Mention