Publications

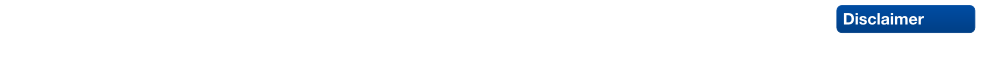

Interactive Segmentation of Textured Point Clouds

We present a method for the interactive segmentation of textured 3D point clouds. We formulate the problem as a minimum graph cut on a k-nearest neighbor graph and use the rich information contained in high-resolution photographs as the discriminative feature. We demonstrate that the achievable segmentation accuracy is significantly improved compared to using an average color per point as in prior work. The method is designed to work efficiently on large datasets and yields results at interactive rates. This way, an interactive workflow can be realized in an immersive virtual environment, which supports the segmentation task through improved depth perception and the use of tracked 3D input devices. Our method enables the fast and convenient creation of high-quality segmentations of textured point clouds.
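
The graph-cut formulation in this abstract can be illustrated with a small sketch. It is not the authors' implementation: the scalar per-point `features`, the Gaussian edge weight with `sigma`, the brute-force neighbor search, and the Edmonds-Karp solver are all assumptions chosen to keep the example short and dependency-free. In the paper the discriminative feature comes from high-resolution photographs, which a single number per point only stands in for.

```python
import math
from collections import deque

def knn_edges(points, k):
    """Undirected edges linking every point to its k nearest neighbors (brute force)."""
    edges = set()
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        for j in others[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges

def source_side_of_min_cut(n, capacity, source, sink):
    """Edmonds-Karp max flow; returns the nodes on the source side of a minimum cut."""
    residual = {u: {} for u in range(n)}
    for (u, v), c in capacity.items():
        residual[u][v] = residual[u].get(v, 0.0) + c
        residual[v].setdefault(u, 0.0)
    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return set(parent)  # everything still reachable from the source
        # Find the bottleneck capacity along the path, then push flow.
        bottleneck, v = math.inf, sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u

def segment(points, features, fg_seeds, bg_seeds, k=2, sigma=0.2):
    """Label each point True (foreground) or False (background)."""
    n = len(points)
    source, sink = n, n + 1
    capacity = {}
    for i, j in knn_edges(points, k):
        # Similar features give a high weight, so the cut prefers dissimilar pairs.
        w = math.exp(-((features[i] - features[j]) ** 2) / (2 * sigma ** 2))
        capacity[(i, j)] = w
        capacity[(j, i)] = w
    for i in fg_seeds:  # user strokes act as hard constraints
        capacity[(source, i)] = 1e9
    for i in bg_seeds:
        capacity[(i, sink)] = 1e9
    side = source_side_of_min_cut(n + 2, capacity, source, sink)
    return [i in side for i in range(n)]

# Hypothetical toy data: six points on a line with two clearly different feature groups.
points = [(float(x), 0.0, 0.0) for x in range(6)]
features = [0.10, 0.12, 0.09, 0.90, 0.88, 0.91]
labels = segment(points, features, fg_seeds=[0], bg_seeds=[5])
```

With one foreground seed and one background seed, the cut falls on the only edge that connects the two feature groups. A practical system would replace the brute-force neighbor search and the simple solver with structures suited to large datasets.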

@inproceedings{10.2312:vmv.20221200,
  booktitle = {Vision, Modeling, and Visualization},
  editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},
  title = {{Interactive Segmentation of Textured Point Clouds}},
  author = {Schmitz, Patric and Suder, Sebastian and Schuster, Kersten and Kobbelt, Leif},
  year = {2022},
  publisher = {The Eurographics Association},
  ISBN = {978-3-03868-189-2},
  DOI = {10.2312/vmv.20221200}
}

Compression and Rendering of Textured Point Clouds via Sparse Coding

Splat-based rendering techniques produce highly realistic renderings from 3D scan data without prior mesh generation. Mapping high-resolution photographs to the splat primitives enables detailed reproduction of surface appearance. However, in many cases these massive datasets do not fit into GPU memory. In this paper, we present a compression and rendering method that is designed for large textured point cloud datasets. Our goal is to achieve compression ratios that outperform generic texture compression algorithms, while still retaining the ability to efficiently render without prior decompression. To achieve this, we resample the input textures by projecting them onto the splats and create a fixed-size representation that can be approximated by a sparse dictionary coding scheme. Each splat has a variable number of codeword indices and associated weights, which define the final texture as a linear combination during rendering. For further reduction of the memory footprint, we compress geometric attributes by careful clustering and quantization of local neighborhoods. Our approach reduces the memory requirements of textured point clouds by one order of magnitude, while retaining the possibility to efficiently render the compressed data.
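
The per-splat representation described here, a variable number of codeword indices with associated weights that are combined linearly at render time, can be sketched as follows. This is an illustration under assumptions, not the paper's method: the greedy matching-pursuit encoder, the unit-norm atoms, the tiny 4-texel dictionary, and the names `encode_splat` and `decode_splat` are all hypothetical.

```python
def decode_splat(dictionary, indices, weights):
    """Rebuild a fixed-size texture as a weighted sum of dictionary atoms."""
    texture = [0.0] * len(dictionary[0])
    for idx, w in zip(indices, weights):
        for p, value in enumerate(dictionary[idx]):
            texture[p] += w * value
    return texture

def encode_splat(dictionary, texture, max_atoms, tol=1e-9):
    """Greedy matching pursuit (assumes unit-norm atoms).

    Stops early once the residual energy drops below tol, so different
    splats can end up with a different number of codewords.
    """
    residual = list(texture)
    indices, weights = [], []
    for _ in range(max_atoms):
        if sum(r * r for r in residual) <= tol:
            break
        # Pick the atom that correlates most strongly with the residual.
        dots = [sum(a * r for a, r in zip(atom, residual)) for atom in dictionary]
        best = max(range(len(dictionary)), key=lambda i: abs(dots[i]))
        indices.append(best)
        weights.append(dots[best])
        residual = [r - dots[best] * a for r, a in zip(residual, dictionary[best])]
    return indices, weights

# Hypothetical dictionary of three orthonormal 4-texel atoms.
dictionary = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, 0.5, -0.5, -0.5],
    [0.5, -0.5, 0.5, -0.5],
]
texture = [1.5, 1.5, 0.5, 0.5]  # equals 2 * atom 0 + 1 * atom 1
indices, weights = encode_splat(dictionary, texture, max_atoms=3)
reconstructed = decode_splat(dictionary, indices, weights)
```

Only `decode_splat` would run at render time, which is why the data can be drawn without a separate decompression step: the stored indices and weights are evaluated directly when the texture is needed.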

Highly accurate digital traffic recording as a basis for future mobility research: Methods and concepts of the research project HDV-Mess

The research project HDV-Mess aims to provide a currently missing but crucial component for addressing important challenges in the field of connected and automated driving on public roads. The goal is to record traffic events at various relevant locations with high accuracy and to collect real traffic data as a basis for the development and validation of current and future sensor technologies as well as automated driving functions. For this purpose, it is necessary to develop a concept for a mobile modular system of measuring stations for highly accurate traffic data acquisition, which enables the temporary installation of a sensor and communication infrastructure at different locations. In this paper, we first discuss the project goals before we present our traffic detection concept using mobile modular intelligent transport systems stations (ITS-Ss). We then explain the approaches for processing raw sensor data into refined trajectories, for data communication, and for data validation.

@article{DBLP:journals/corr/abs-2106-04175,
  author = {Laurent Kloeker and Fabian Thomsen and Lutz Eckstein and Philip Trettner and Tim Elsner and Julius Nehring{-}Wirxel and Kersten Schuster and Leif Kobbelt and Michael Hoesch},
  title = {Highly accurate digital traffic recording as a basis for future mobility research: Methods and concepts of the research project HDV-Mess},
  journal = {CoRR},
  volume = {abs/2106.04175},
  year = {2021},
  url = {https://arxiv.org/abs/2106.04175},
  eprinttype = {arXiv},
  eprint = {2106.04175},
  timestamp = {Fri, 11 Jun 2021 11:04:16 +0200},
  biburl = {https://dblp.org/rec/journals/corr/abs-2106-04175.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}

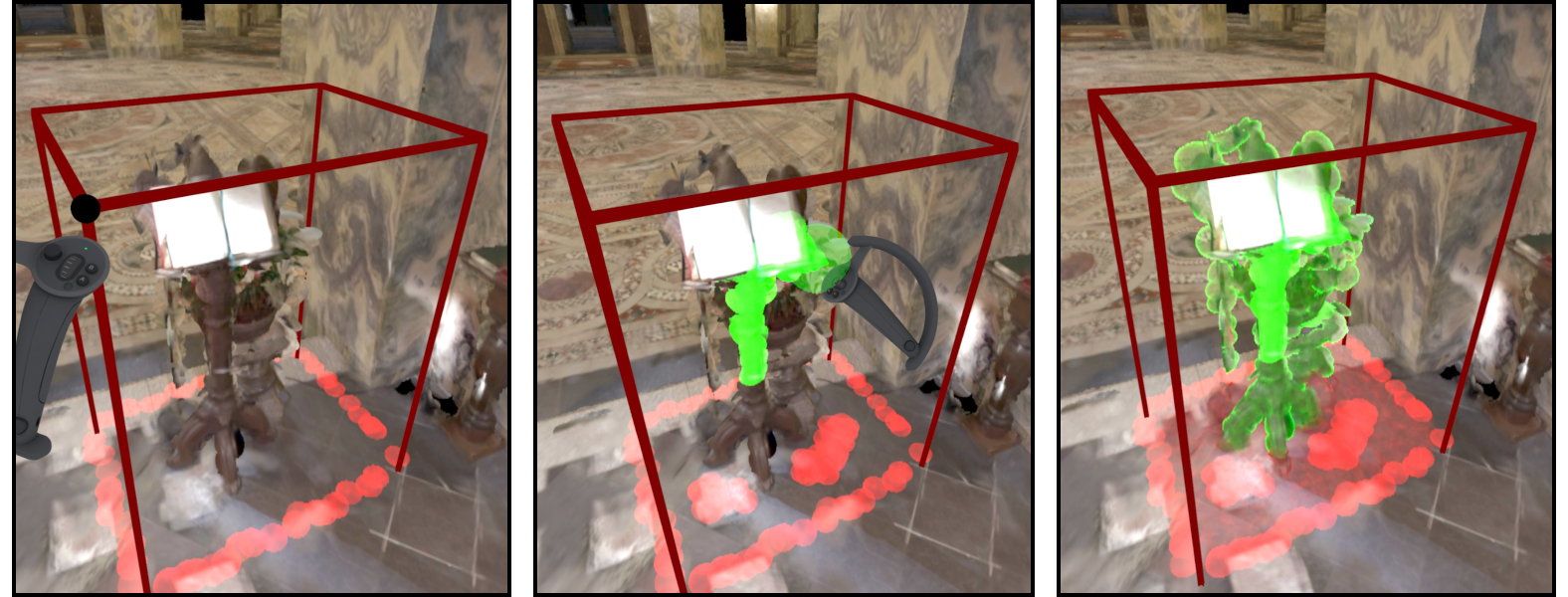

High-Performance Image Filters via Sparse Approximations

We present a numerical optimization method to find highly efficient (sparse) approximations for convolutional image filters. Using a modified parallel tempering approach, we solve a constrained optimization that maximizes approximation quality while strictly staying within a user-prescribed performance budget. The results are multi-pass filters where each pass computes a weighted sum of bilinearly interpolated sparse image samples, exploiting hardware acceleration on the GPU. We systematically decompose the target filter into a series of sparse convolutions, trying to find good trade-offs between approximation quality and performance. Since our sparse filters are linear and translation-invariant, they do not exhibit the aliasing and temporal coherence issues that often appear in filters working on image pyramids. We show several applications, ranging from simple Gaussian or box blurs to the emulation of sophisticated Bokeh effects with user-provided masks. Our filters achieve high performance as well as high quality, often providing significant speed-up at acceptable quality even for separable filters. The optimized filters can be baked into shaders and used as a drop-in replacement for filtering tasks in image processing or rendering pipelines.
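
A single filter pass of the kind described here, a weighted sum of bilinearly interpolated sparse samples, can be sketched in a few lines. The sketch runs on the CPU and makes several assumptions that are not from the paper: integer coordinates denote pixel centers, borders are clamped, and the two hand-picked taps are a textbook example rather than the output of the optimization. On a GPU the interpolation would come from the texture unit.

```python
import math

def bilinear(image, x, y):
    """Sample a 2D image at continuous coordinates with clamp-to-edge lookup."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def sparse_pass(image, taps):
    """One pass: each output pixel is a weighted sum of a few interpolated samples.

    taps is a list of (dx, dy, weight) with possibly fractional offsets.
    """
    h, w = len(image), len(image[0])
    return [[sum(wt * bilinear(image, x + dx, y + dy) for dx, dy, wt in taps)
             for x in range(w)]
            for y in range(h)]

# Two half-pixel taps reproduce the three-tap kernel [1, 2, 1] / 4,
# because each bilinear fetch already averages two neighboring pixels.
taps = [(-0.5, 0.0, 0.5), (0.5, 0.0, 0.5)]
image = [[0.0, 0.0, 4.0, 0.0, 0.0]]
blurred = sparse_pass(image, taps)
```

Chaining several such passes, each with its own tap list, gives a multi-pass filter; choosing the offsets and weights under a performance budget is the part the abstract attributes to the parallel tempering optimization.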

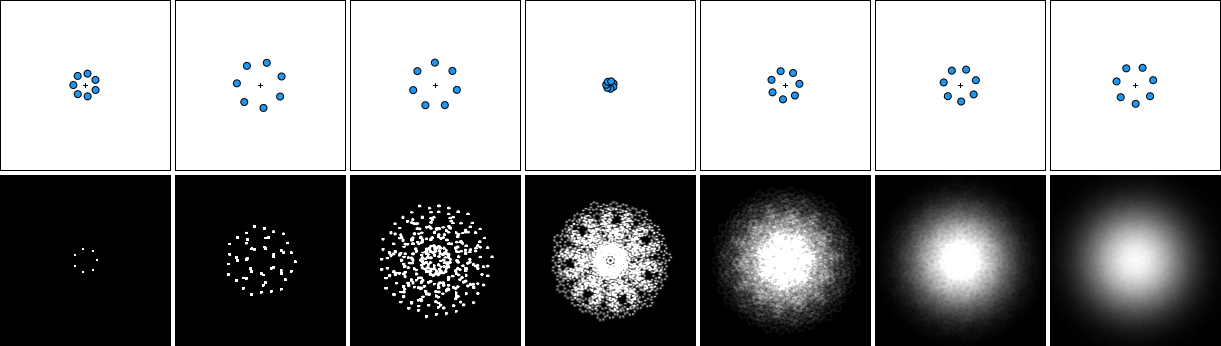

A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces

We present a method for example-based texturing of triangular 3D meshes. Our algorithm maps a small 2D texture sample onto objects of arbitrary size in a seamless fashion, with no visible repetitions and low overall distortion. It requires minimal user interaction and can be applied to complex, multi-layered input materials that are not required to be tileable. Our framework integrates a patch-based approach with per-pixel compositing. To minimize visual artifacts, we run a three-level optimization that starts with a rigid alignment of texture patches (macro scale), then continues with non-rigid adjustments (meso scale) and finally performs pixel-level texture blending (micro scale). We demonstrate that the relevance of the three levels depends on the texture content and type (stochastic, structured, or anisotropic textures).

@article{schuster2020,
  author = {Schuster, Kersten and Trettner, Philip and Schmitz, Patric and Kobbelt, Leif},
  title = {A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces},
  year = {2020},
  issue_date = {Apr 2020},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {3},
  number = {1},
  url = {https://doi.org/10.1145/3384542},
  doi = {10.1145/3384542},
  journal = {Proc. ACM Comput. Graph. Interact. Tech.},
  month = apr,
  articleno = {1},
  numpages = {19},
  keywords = {material blending, surface texture synthesis, texture mapping}
}