Publications

SIFT-Realistic Rendering

3D localization approaches establish correspondences between points in a query image and a 3D point cloud reconstruction of the environment. Traditionally, the database models are created from photographs using Structure-from-Motion (SfM) techniques, which require large collections of densely sampled images. In this paper, we address the question of how point cloud data from terrestrial laser scanners can be used instead to significantly reduce the data collection effort and enable more scalable localization.

The key challenge here is that, in contrast to SfM points, laser-scanned 3D points are not automatically associated with local image features that could be matched to query image features. To make this data usable for image-based localization, we explore how point cloud rendering techniques can be leveraged to create virtual views from which database features can be extracted that match real image-based features as closely as possible. We propose different rendering techniques for this task, experimentally quantify how they affect feature repeatability, and demonstrate their benefit for image-based localization.

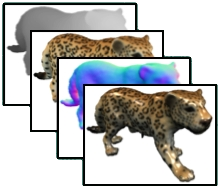

High-Quality Surface Splatting on Today's GPUs

Because of their conceptual simplicity and superior flexibility, point-based geometries evolved into a valuable alternative to surface representations based on polygonal meshes. Elliptical surface splats were shown to allow for high-quality anti-aliased rendering by sophisticated EWA filtering. Since the publication of the original software-based EWA splatting, several authors tried to map this technique to the GPU in order to exploit hardware acceleration. Due to the lack of support for splat primitives, these methods always have to find a trade-off between rendering quality and rendering performance. In this paper, we discuss the capabilities of today's GPUs for hardware-accelerated surface splatting. We present an approach that achieves a quality comparable to the original EWA splatting at a rate of more than 20M elliptical splats per second. In contrast to previous GPU renderers, our method provides per-pixel Phong shading even for dynamically changing geometries and high-quality anti-aliasing by employing a screen-space pre-filter in addition to the object-space reconstruction filter. The use of deferred shading techniques effectively avoids unnecessary shader computations and additionally provides a clear separation between the rasterization and the shading of elliptical splats, which considerably simplifies the development of custom shaders. We demonstrate the quality, efficiency, and flexibility of our approach by showing several shaders on a range of models.
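The separation between rasterizing splats and shading them can be illustrated with a minimal CPU sketch. It is not the paper's GPU implementation: it assumes circular screen-space Gaussian footprints instead of elliptical splats, and the function names, the `radius` cutoff, and the data layout are all hypothetical. The first pass only accumulates weighted attributes; the second pass normalizes them once per covered pixel, which is where a deferred shader would run.

```python
import math

def accumulate_splats(splats, width, height, radius=2.0):
    """Pass 1: sum Gaussian-weighted colors and weights per pixel.

    splats is a list of (cx, cy, color) with color as an (r, g, b) tuple.
    Circular footprints are an assumption made to keep the sketch short.
    """
    acc = [[[0.0, 0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for cx, cy, color in splats:
        for y in range(height):
            for x in range(width):
                d2 = (x - cx) ** 2 + (y - cy) ** 2
                if d2 > radius * radius:
                    continue  # pixel lies outside this splat's footprint
                w = math.exp(-0.5 * d2)
                pixel = acc[y][x]
                for i in range(3):
                    pixel[i] += w * color[i]
                pixel[3] += w
    return acc

def normalize_pixels(acc):
    """Pass 2: divide accumulated colors by the weight sum, once per pixel.

    Uncovered pixels are returned as None. Per-pixel shading would be
    applied here, after blending, rather than once per splat fragment.
    """
    return [
        [tuple(c / px[3] for c in px[:3]) if px[3] > 0.0 else None for px in row]
        for row in acc
    ]
```

With two equally distant splats of different colors, the normalized pixel between them is their even blend, and shading work is spent only on pixels that end up covered.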

Phong Splatting

Surface splatting has developed into a valuable alternative to triangle meshes when it comes to rendering of highly detailed massive datasets. However, even highly accurate splat approximations of the given geometry may sometimes not provide a sufficient rendering quality since surface lighting mostly depends on normal vectors whose deviation is not bounded by the Hausdorff approximation error. Moreover, current point-based rendering systems usually associate a constant normal vector with each splat, leading to rendering results which are comparable to flat or Gouraud shading for polygon meshes. In contrast, we propose to base the lighting of a splat on a linearly varying normal field associated with it, and we show that the resulting Phong Splats provide a visual quality which is far superior to existing approaches. We present a simple and effective way to construct a Phong splat representation for a given set of input samples. Our surface splatting system is implemented completely based on vertex and pixel shaders of current GPUs and achieves a splat rate of up to 4M Phong shaded, filtered, and blended splats per second. In contrast to previous work, our scan conversion is projectively correct per pixel, leading to more accurate visualization and clipping at sharp features.
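The idea of a linearly varying normal field can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's shader code: each splat is assumed to store a center normal plus two hypothetical gradient vectors `n_du` and `n_dv` along its local axes, and a simple diffuse term stands in for the full lighting model.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def splat_normal(n_center, n_du, n_dv, u, v):
    """Evaluate the linear normal field at local splat coordinates (u, v).

    n_du and n_dv are assumed per-splat normal gradients (hypothetical names).
    """
    n = tuple(n_center[i] + u * n_du[i] + v * n_dv[i] for i in range(3))
    return normalize(n)

def diffuse(normal, light_dir):
    """Clamped cosine between a unit normal and the light direction."""
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(normal, l)))
```

Setting both gradients to zero reproduces the constant-normal case, in which every pixel of a splat receives the same lighting; non-zero gradients let the lighting vary across the splat.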

Efficient High Quality Rendering of Point Sampled Geometry

We propose a highly efficient hierarchical representation for point sampled geometry that automatically balances sampling density and point coordinate quantization. The representation is very compact, with a memory consumption of far less than 2 bits per point position, which does not depend on the quantization precision. We present an efficient rendering algorithm that exploits the hierarchical structure of the representation to perform fast 3D transformations and shading. The algorithm is extended to surface splatting, which yields high quality anti-aliased and watertight surface renderings. Our pure software implementation renders up to 14 million Phong shaded and textured samples per second and about 4 million anti-aliased surface splats on a commodity PC. This is more than a factor of 10 faster than previous algorithms.
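Why a hierarchical encoding can have a per-point cost that does not grow with quantization precision can be seen in a small counting sketch. It is not the paper's encoding: it assumes a plain octree in which every internal node stores an uncompressed 8-bit child-occupancy mask, and the function name is hypothetical. The abstract reports a lower cost than this naive scheme yields; how that is achieved is not described here.

```python
def octree_mask_bits(cells, depth):
    """Count the bits of a naive octree with one 8-bit child mask per internal node.

    cells is a set of occupied integer grid cells (x, y, z), each coordinate
    in [0, 2**depth). Finer quantization corresponds to a larger depth.
    """
    level = set(cells)
    internal_nodes = 0
    for _ in range(depth):
        # Halving the coordinates maps each cell to its parent node.
        level = {(x >> 1, y >> 1, z >> 1) for (x, y, z) in level}
        internal_nodes += len(level)
    return 8 * internal_nodes
```

For a fully sampled planar patch, where each node has four occupied children, this count stays just below 8/3 bits per leaf cell at every depth, so refining the grid adds points without raising the per-point cost.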